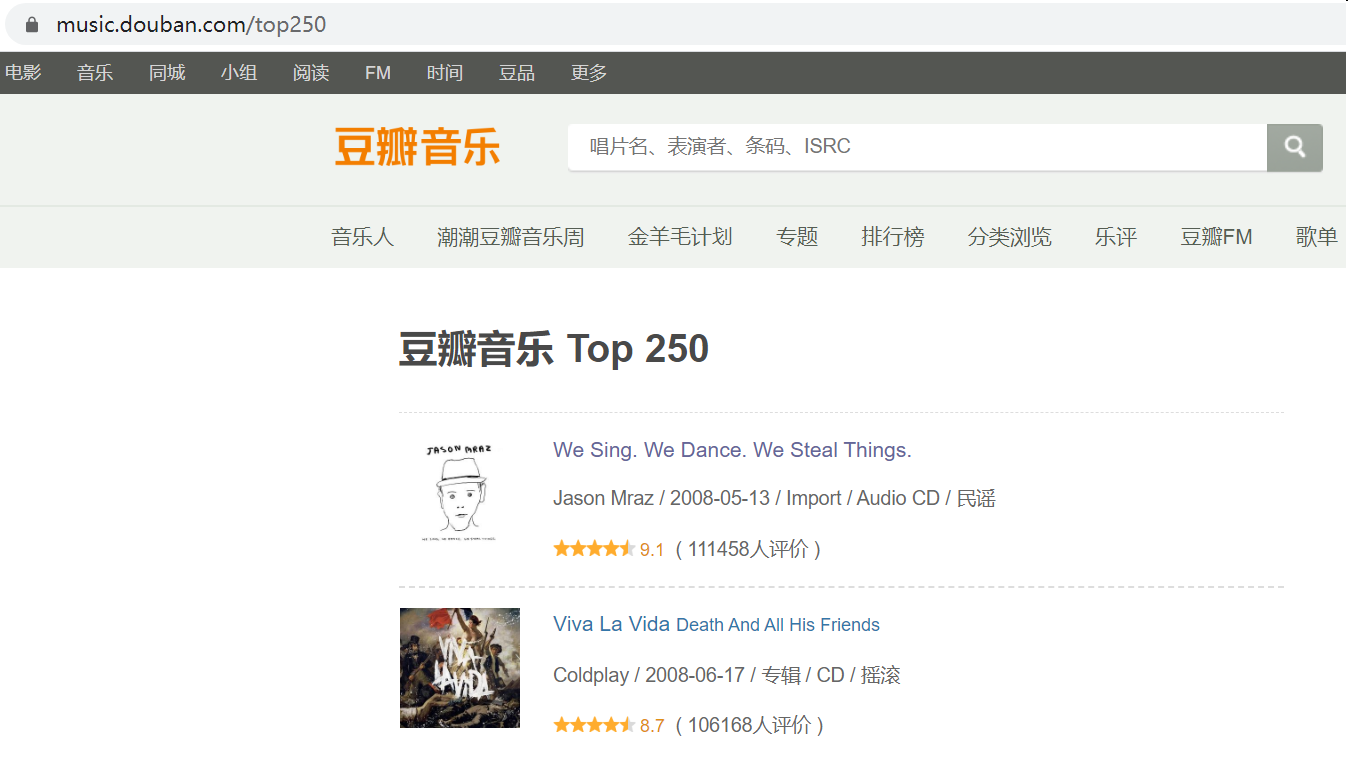

```python
import csv
import re

import requests
from fake_useragent import UserAgent
from lxml import etree

# Column order of the output CSV.
FIELDNAMES = ["name", "author", "style", "time", "publisher", "score"]

# Random browser User-Agent. Newer fake_useragent versions removed the
# verify_ssl argument, so the constructor is called without it.
headers = {
    "User-Agent": UserAgent().random,
    "Connection": "close",
}


def get_music_url(url, filename):
    """Collect album detail links from one Top 250 list page and scrape each one."""
    html = requests.get(url, headers=headers, timeout=10).text
    tree = etree.HTML(html)
    # Every album cover link on the list page has class="nbg".
    all_music_url = tree.xpath('//*[@class="nbg"]/@href')
    for music_url in all_music_url:
        get_music_info(music_url, filename)


def extract_field(html, label, default='未知'):
    """Return the text after <span class="pl">label:</span>, or default ('未知' = "unknown")."""
    pattern = r'<span class="pl">{}:</span>\s*(.*?)<br />'.format(label)
    match = re.search(pattern, html, re.S)
    return match.group(1).strip() if match else default


def get_music_info(music_url, filename):
    """Scrape one album page and append its fields to the CSV file."""
    html = requests.get(music_url, headers=headers, timeout=10).text
    tree = etree.HTML(html)

    # Guard every lookup so one incomplete page does not abort the whole crawl.
    names = tree.xpath('//*[@id="wrapper"]/h1/span/text()')
    authors = tree.xpath('//*[@id="info"]/span/span/a/text()')
    scores = tree.xpath('//*[@class="ll rating_num"]/text()')

    music_info = {
        "name": names[0].strip() if names else '未知',
        "author": authors[0].strip() if authors else '未知',
        "style": extract_field(html, '流派'),        # genre
        "time": extract_field(html, '发行时间'),      # release date
        "publisher": extract_field(html, '出版者'),  # publisher
        "score": scores[0].strip() if scores else '未知',
    }
    print(music_info)
    save_to_csv(filename, music_info)


def save_to_csv(filename, music_info):
    """Append one row; newline='' stops csv from writing blank lines on Windows."""
    with open(filename, 'a', encoding='utf-8', newline='') as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        writer.writerow(music_info)


if __name__ == "__main__":
    # 10 list pages with 25 albums each: start=0, 25, ..., 225.
    urls = ['https://music.douban.com/top250?start={}'.format(i) for i in range(0, 250, 25)]
    filename = 'musicTop250.csv'

    # 'w' truncates output from earlier runs, so the header is written exactly once.
    with open(filename, 'w', encoding='utf-8', newline='') as f:
        csv.DictWriter(f, fieldnames=FIELDNAMES).writeheader()

    for url in urls:
        get_music_url(url, filename)
```
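Because the scraper needs live access to Douban, it can help to check its parsing steps offline before running it. The sketch below is a stand-in built on assumptions: the two HTML strings are hypothetical, modeled on the selectors and regular expressions in the scraper rather than copied from a real Douban page. It also swaps lxml's XPath for the standard library's `html.parser` so it runs without third-party packages. `NbgLinkParser` and `extract_field` are illustrative helper names.

```python
import re
from html.parser import HTMLParser

# Hypothetical list page: cover links carry class="nbg" (real markup may differ).
LIST_PAGE = '''
<table><tr><td>
<a class="nbg" href="https://music.douban.com/subject/1/">cover</a>
<a class="nbg" href="https://music.douban.com/subject/2/">cover</a>
</td></tr></table>
'''

# Hypothetical album page "info" block; there is deliberately no publisher line.
DETAIL_PAGE = '''
<div id="info">
<span class="pl">流派:</span> 摇滚<br />
<span class="pl">发行时间:</span> 1994-09-01<br />
</div>
'''


class NbgLinkParser(HTMLParser):
    """Collect href values of any tag whose class is exactly "nbg",
    mirroring the XPath //*[@class="nbg"]/@href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if attrs.get('class') == 'nbg' and 'href' in attrs:
            self.links.append(attrs['href'])


def extract_field(html, label, default='未知'):
    """Return the text after <span class="pl">label:</span>, or default ('未知' = "unknown")."""
    pattern = r'<span class="pl">{}:</span>\s*(.*?)<br />'.format(re.escape(label))
    match = re.search(pattern, html, re.S)
    return match.group(1).strip() if match else default


# The same pagination scheme as the scraper: 10 pages of 25 albums.
page_urls = ['https://music.douban.com/top250?start={}'.format(i) for i in range(0, 250, 25)]

parser = NbgLinkParser()
parser.feed(LIST_PAGE)
print(parser.links)
print(extract_field(DETAIL_PAGE, '流派'))    # genre
print(extract_field(DETAIL_PAGE, '出版者'))  # publisher is missing, so the fallback is used
```

Running it prints the two collected links, then `摇滚`, then the `未知` fallback for the missing publisher field. Saving one real album page and feeding it to the same functions is a quick way to confirm the patterns still match Douban's current markup.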